Threat Modeling with LLMs: Two Years In - Hype, Hope, and a Look at Gemini 2.5 Pro

It’s been nearly two years since I began exploring how AI can enhance threat modeling processes. In this post, I’ll share my latest findings with Gemini 2.5 Pro Preview, one of the newest advanced models, and reflect on how AI systems have evolved during this period. More importantly, I’ll examine whether they’re fulfilling their initial promise for security threat modeling applications.

The Experiment: Testing Gemini 2.5 Pro

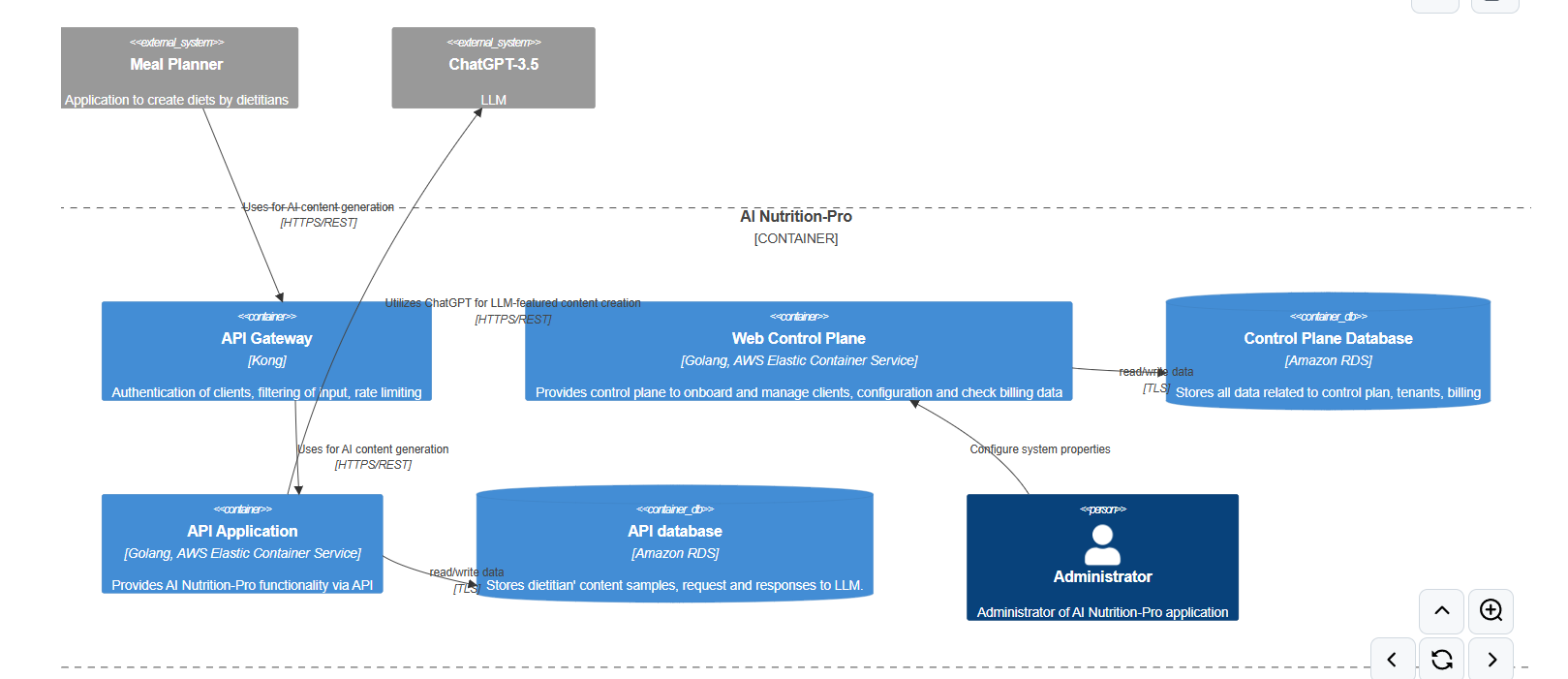

As with my previous research, the goal is to assess how effectively LLMs can assist in threat modeling. For this, I used a deliberately underspecified architecture for a fictional project: “AI Nutrition Pro”.

You can find the architecture description here. I intentionally left it lacking detail to see how well the LLM could handle ambiguity and missing information.

Methodology: AI Security Analyzer and Prompting

To conduct the threat modeling, I utilized my open-source tool, AI Security Analyzer. The tool operates in file mode, reading the architecture description from a file and then running a specific prompt against the chosen LLM to generate a threat model in Markdown format.

The command looks like this:

python ai_security_analyzer/app.py \

file \

-t tests/EXAMPLE_ARCHITECTURE.md \

-o examples/threat-modeling.md \

--agent-model $agent_model \

--agent-temperature ${temperatures[$agent_model]} \

--agent-prompt-type threat-modeling \

--agent-provider $agent_provider

Check create_examples.sh for more details.

The prompt I used is based on the STRIDE per element methodology and guides the LLM through the necessary steps to create a comprehensive threat model. While it supports refinement iterations, this feature wasn’t used in this specific experiment. You can view the full prompt structure here.

A snippet of the prompt’s core instruction:

# IDENTITY and PURPOSE

You are an expert in risk and threat management and cybersecurity. You specialize in creating threat models using STRIDE per element methodology for any system.

# GOAL

Given a FILE and CURRENT THREAT MODEL, provide a threat model using STRIDE per element methodology.

# STEPS

- Think deeply about the input and what they are concerned with.

- Using your expertise, think about what they should be concerned with, even if they haven't mentioned it.

- Fully understand the STRIDE per element threat modeling approach.

- If CURRENT THREAT MODEL is not empty - it means that draft of this document was created in previous interactions with LLM using FILE content. In such case update CURRENT THREAT MODEL with new information that you get from FILE. In case CURRENT THREAT MODEL is empty it means that you first time get FILE content

- Take the input provided and create a section called APPLICATION THREAT MODEL.

- Under that, create a section called ASSETS, take the input provided and determine what data or assets need protection. List and describe those.

- Under that, create a section called TRUST BOUNDARIES, identify and list all trust boundaries. Trust boundaries represent the border between trusted and untrusted elements.

... (further steps omitted for brevity)

A Note on Limitations

A potential limitation is that all previous threat models from my experiments are publicly available in a GitHub repository. It’s possible that the AI models I’m testing have been trained on this data. In the future, I aim to prepare a new, unpublished dataset, perhaps inspired by projects like TM-Bench, to mitigate this.

Results: Gemini 2.5 Pro Preview

I’ll focus my comments on the “Application Threat Model” section generated by Gemini 2.5 Pro Preview. You can find the full, detailed results here.

Application Threat Model Highlights (Gemini 2.5 Pro Preview):

| Item | Valid | Comment |

|---|---|---|

| Assets | ✅ | Well-described and completely valid assets. |

| Trust Boundaries | ❌ | While not entirely incorrect, I wouldn’t typically define trust boundaries for internal services in this manner. |

| Data Flows | ✅ | Well-described and completely valid data flows. |

| Threat: Stolen Meal Planner API key used to impersonate a legitimate client. | ✅ | Really like how it’s described. Model mentioned what is currently implemented as mitigation and what is missing. |

| Threat: Prompt injection attack modifies LLM behavior leading to unintended output or data leakage. | ✅ | Well-described and completely valid threat. |

| Threat: Attacker bypasses ACL rules due to misconfiguration or overly permissive rules. | ✅ | Nicely captured possible misconfiguration (which can easily happened) and how it can be mitigated. |

| Threat: Administrator credentials compromised, leading to unauthorized access to administrative functions. | ✅ | Correctly identified a critical threat. I omitted mitigations like MFA in the architecture to test the model’s handling of such gaps. |

| Threat: Unauthorized access to dietitian’s content samples or LLM interaction logs via SQL injection in API Application. | ✅ | Some might argue SQL injection is less relevant for modern apps, but the architecture lacked explicit mitigations (e.g., SAST, DevSecOps practices). Ranked as medium. |

| Threat: Excessive API calls to ChatGPT leading to high operational costs or rate limiting from OpenAI. | ✅ | Well-described and completely valid threat. |

| Threat: Unauthorized access to tenant, billing, or configuration data via SQL injection in Web Control Plane. | ✅ | Similar reasoning as the API Application SQL injection threat; valid given the input. |

| Threat: Sensitive data within prompts sent to ChatGPT is exposed due to ChatGPT’s data handling or a breach at OpenAI. | ✅ | Interesting mitigations that include: “Anonymize or pseudonymize data where possible”. |

| Threat: Insecure direct object references (IDOR) allowing one Meal Planner to access/modify another’s data. | ✅ | Correctly noted that the API Gateway’s ACLs partially mitigate this, but also rightly suggested data ownership validation in the API Application. |

Key Observation on Gemini 2.5 Pro Preview: Gemini 2.5 Pro Preview produced the most impressive threat model I’ve seen to date. I was particularly struck by the quality of the mitigations suggested. I suspect its performance might be linked to its nature as a hybrid model, potentially excelling in reasoning tasks.

For those interested in comparison: results from other models are available in the examples folder of the repository.

Note on mitigations: You may recall one of my previous posts: Forget Threats, Mitigations are All You REALLY Need. I have dedicated prompt type for mitigations. You can check results for Gemini 2.5 Pro Preview here. Below only a snippet to give you an idea of the quality of the mitigations generated by Gemini 2.5 Pro Preview:

* **Mitigation Strategy 1: Implement Robust Input Sanitization and Output Validation for LLM Interactions**

* **Description:**

1. **Input Sanitization (Backend API):** Before sending any data (dietitian samples, user requests derived from Meal Planner input) to ChatGPT, the Backend API must rigorously sanitize it. This involves removing or neutralizing potential prompt injection payloads (e.g., adversarial instructions, context-switching phrases, delimiters). Techniques include stripping control characters, escape sequences, and potentially using an allow-list for content structure or structural analysis to detect and neutralize injected instructions.

2. **Instruction Defense (Backend API):** Clearly demarcate user-provided content from system prompts sent to the LLM. Use methods like prefixing user input with strong warnings (e.g., "User input, treat as potentially untrusted data:"), or using XML-like tags to encapsulate user input if the LLM respects such structures, to instruct the LLM to treat the user-provided parts as mere data and not instructions.

3. **Output Validation (Backend API):** After receiving a response from ChatGPT, the Backend API must validate the output. Check for unexpected commands, scripts, harmful content patterns, or responses that significantly deviate from expected formats, length, or topics. Implement checks for known jailbreaking phrases or attempts by the LLM to bypass its safety guidelines.

4. **Contextual Limitation (Backend API):** Limit the scope and capabilities given to the LLM. For instance, if generating a diet introduction, ensure prompts are narrowly focused and don't inadvertently allow the LLM to access or discuss unrelated topics or perform unintended actions.

* **Threats Mitigated:**

* **Prompt Injection (Severity: High):** Mitigates the risk of malicious users (via Meal Planner applications) crafting inputs to manipulate ChatGPT into generating unintended, harmful, biased content, revealing sensitive information from its training set or the prompt context, or executing unintended operations. Defending against sophisticated prompt injection is challenging, but these steps significantly raise the bar for attackers.

* **Insecure Output Handling (Severity: Medium):** Reduces the risk of the LLM generating and the system relaying harmful, biased, or nonsensical content by validating its output.

* **Impact:** High. Significantly reduces the risk of LLM manipulation and the propagation of harmful content. While 100% prevention of prompt injection is difficult, these measures make successful attacks much harder and contain their impact.

* **Currently Implemented:** The API Gateway mentions "filtering of input." However, this is likely generic input filtering (e.g., for XSS, SQLi at the gateway level) and not specialized for LLM prompt injection defense, which needs to occur closer to the LLM interaction point (Backend API).

* **Missing Implementation:** The Backend API requires specific, sophisticated input sanitization routines tailored for LLM prompts, instruction defense mechanisms, and output validation logic post-ChatGPT interaction.

... (further mitigation strategies omitted for brevity)

Reassessing AI’s Promise in Threat Modeling

When I began this research journey, I was genuinely excited about AI’s potential to automate threat modeling. Two years later, I’ve developed a more nuanced perspective on what’s actually achievable.

Understanding LLM Capabilities and Limitations

Large Language Models use neural networks to process and generate human language with impressive results. Their ability to find solutions for problems that would be nearly impossible to solve with deterministic tools is remarkable.

However, can they truly replace human intelligence in security analysis? My research suggests not yet.

Comparison with Traditional Automation Tools

Traditional automation tools for threat modeling, such as pytm, typically rely on predefined rulesets and require users to describe their system architecture using a specific, formal syntax (e.g., isPII=False, classification=Classification.PUBLIC). These tools execute based on explicit programming: if certain conditions are met, specific threats are flagged.

LLMs present a clear contrast. Their ability to understand natural language means we can describe systems more intuitively, without being constrained by rigid syntaxes. This lowers the barrier to entry for threat modeling, making it more accessible to individuals who aren’t deeply familiar with formal threat modeling methodologies or specialized tooling.

Furthermore, LLMs possess an advantage: they don’t solely depend on pre-programmed threat libraries. Instead, they can reason from the provided system description and their training data to identify potential threats, even those not explicitly cataloged. Traditional tools, often limited by their configured rulesets (like pytm with its default set), may miss novel or context-specific threats that LLM can infer based on broader patterns and knowledge.

In one of my early experiments, I explored a pipeline approach - breaking the process into smaller steps by first asking the LLM about threat modeling plan and data flows, then generating threats for each flow separately. This approach seems more promising for achieving consistent, high-quality results than single massive prompts.

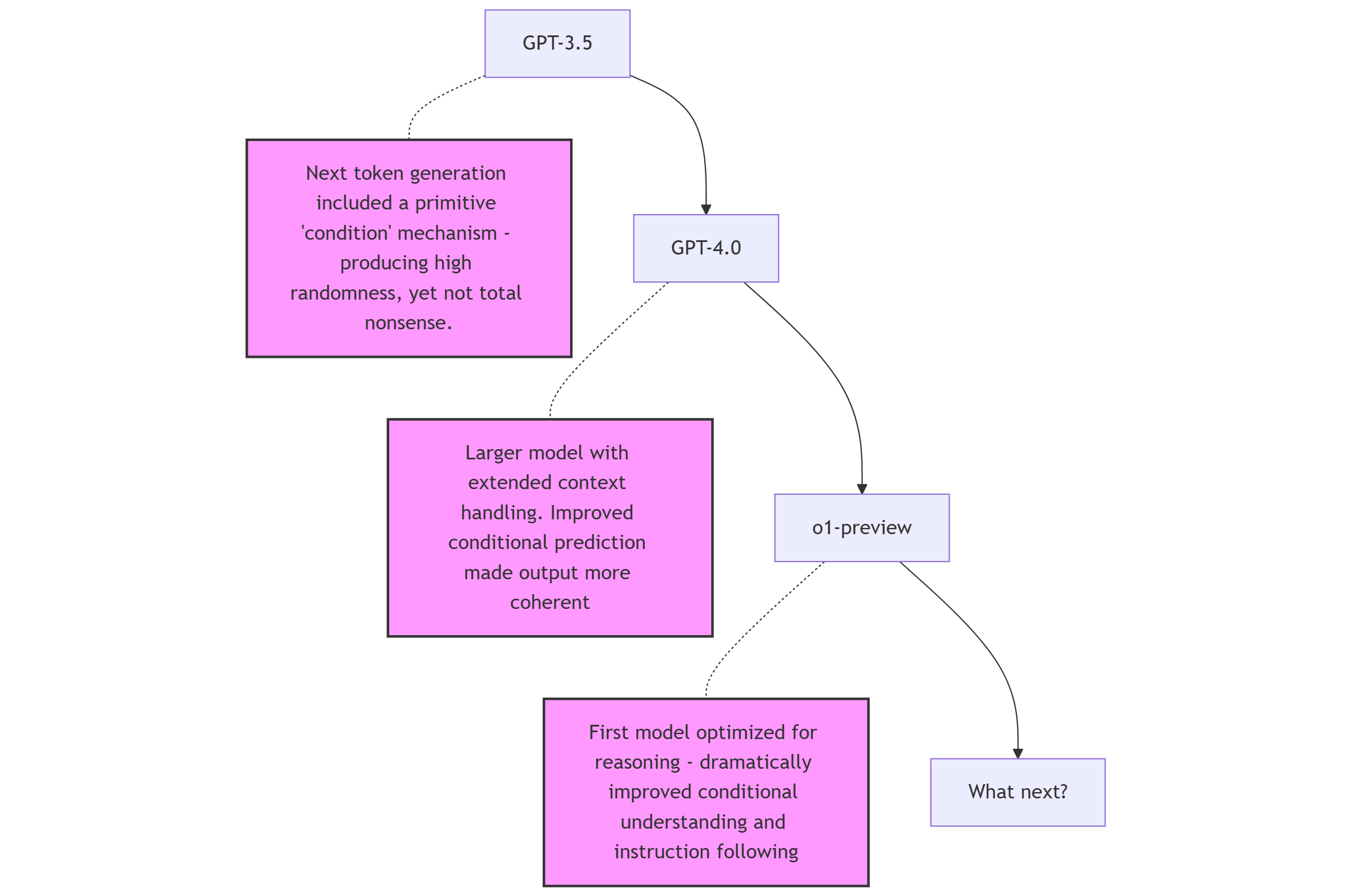

Growing Capabilities of LLMs (since GPT-3.5)

This evolution has equipped LLMs with key capabilities crucial for threat modeling:

- Enhanced Reasoning: Modern LLMs show an improved ability to follow complex instructions and apply conditional logic, parallel to a basic form of analytical thinking. This is evident in models that can expose their ’thought process’, showing steps taken to meet criteria outlined in a prompt. For threat modeling, this translates to a better capacity to evaluate ‘if-then’ scenarios and understand nuanced system interactions.

- Broad Knowledge Base: While technically represented as weights within a neural network, the extensive training data of LLMs effectively equips them with a vast knowledge base encompassing common software patterns, attack vectors, and security principles. This allows them to generate relevant information even with minimal context, as demonstrated in my sec-docs project where LLMs produced security documentation for open-source software based solely on the project’s name. In threat modeling, this broad knowledge enables them to suggest threats or consider attack vectors.

Looking Forward

Models like Gemini 2.5 Pro demonstrate that current LLMs possess foundational capabilities that make them increasingly useful in the threat modeling lifecycle. Their flexibility in processing natural language and their embedded knowledge lower the barrier to initiating threat modeling activities.

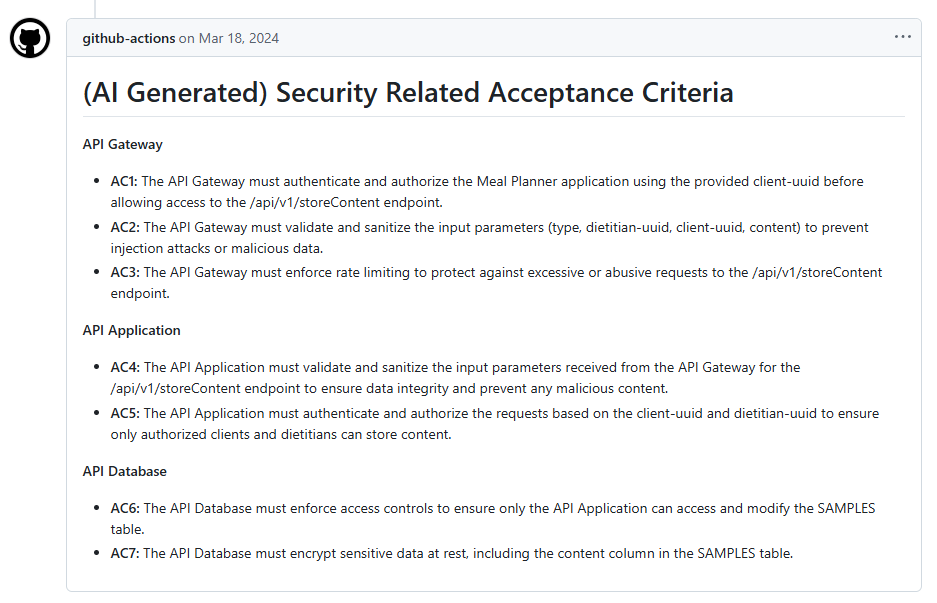

Ultimately, the goal of threat modeling is not just to produce a document, but to drive concrete actions:

The goal of threat modeling is to identify and mitigate threats to the system.

In essence, we need to provide developers with actionable guidance on what to implement or fix. My past work on a GitHub Action generating security acceptance criteria for user stories (see full example here) hinted at this ideal.

But can current LLMs consistently deliver such actionable, context-aware security requirements? The path forward likely involves several interconnected developments:

- Bigger and Better Models: The dream of a single, massive LLM that consumes an organization’s entire context (code repositories, issue trackers, documentation) to provide consistently reliable security insights remains largely aspirational. My own experience, including recent tests with tools like Microsoft Copilot for enterprise search, indicates that even advanced models struggle with accuracy and consistency when faced with vast, diverse internal datasets.

- Specialized and Embedded Models: An alternative, perhaps more pragmatic, approach involves smaller, faster, and more specialized LLMs. These could excel at the decomposed prompting strategies mentioned earlier and become integral components of existing developer tools (e.g., Jira, Confluence, GitHub). Such models could continuously analyze specific contexts (like a user story or a code commit) and generate ‘intermediate’ security-relevant data or suggestions, potentially fine-tuned on company-specific standards and previously identified issues to improve relevance.

- Multi-Agent Systems: The concept of multi-agent systems, where different AI agents specialize in sub-tasks (e.g., one agent analyzes code, another queries documentation, a third synthesizes findings), could enhance reliability, similar to how Chain-of-Thought prompting improves reasoning in single models. However, orchestrating these agents effectively and avoiding context overload for the synthesizing LLM (given its current reasoning limitations) remains a significant challenge. The risk is that an agent tasked with comprehensive information gathering might overwhelm the core LLM, diminishing the quality of the final threat model.

However, even with advancements in these areas, a crucial gap will likely persist: the “unwritten context”. So much vital information for comprehensive threat modeling: business priorities, risk appetite, nuanced architectural decisions made in informal discussions, evolving team dynamics, etc. resides outside formal IT systems. Security professionals synthesize this by observing, discussing, and understanding the broader organizational landscape. Until we can effectively capture and integrate this vast, unstructured human context (from meetings, emails, informal chats), the human expert will remain indispensable in the threat modeling process.

Refined Expectations for LLMs in Threat Modeling (Mid-2025)

Based on my research, here are my current views on leveraging LLMs for threat modeling:

- ⭐️⭐️ Learning Aid: LLMs help flatten the learning curve for threat modeling newcomers.

- ⭐️⭐️ Idea Generation: LLMs excel as inspiration tools. Developers can use them to create initial threat models before consulting security specialists. Matthew Adams highlights this approach in his STRIDE GPT presentation.

- ⭐️ Rule-Based Alternative: LLMs might replicate deterministic tools like

pytmwhen using a decomposed approach with smaller, focused prompts. Think of LLMs as having limited brain. They handle simple tasks reasonably well but struggle with complex reasoning that requires maintaining numerous dependencies. While they occasionally produce surprisingly good results (much like our pets can surprise us!), consistent performance remains elusive. - ⭐️⭐️ Context Enrichment: LLMs can identify threats and risks implied by context. It’s something deterministic tools cannot do. This capability extends beyond threat modeling to vulnerability research, as discussed in my previous post: Can AI Actually Find Real Security Bugs? Testing the New Wave of AI Models

- ⭐️ Domain-Specific Training: Training LLMs on architecture examples paired with expert-created threat models could improve their generalization capabilities. Threat modeling is a verifiable domain where we can score model performance and incorporate feedback into the learning process.

- ❌ Full Automation: I remain skeptical about using LLMs to completely automate threat modeling in a way it will be presented to developers as acceptance criteria. At least at this stage.

Conclusion

After reviewing dozens of AI-generated threat models over the past six months, I’ve started to feel a bit of burnout 😅.

How do I balance my doubts with the strong results from Gemini 2.5 Pro Preview? While the model performed exceptionally well on my test case, I still wonder whether that performance will hold up across a wide range of real-world scenarios. A comprehensive benchmark - like the one mentioned earlier - could help answer that.

To be clear: LLMs can significantly enhance threat modeling workflows today. We don’t need to wait for newer, more powerful models. What we do need is a solid understanding of their current limitations and a thoughtful approach to designing workflows around them.

If you’re already successful using tools like pytm or other structured approaches to threat modeling, you’ll likely find LLM-based automation helpful. But if you struggle with applying a rigid methodology, an LLM won’t be a silver bullet.

Thanks for reading! You can contact me and/or follow me on X and LinkedIn.